Insurance companies have access to huge amounts of data, but the sector has been slow to exploit its potential.

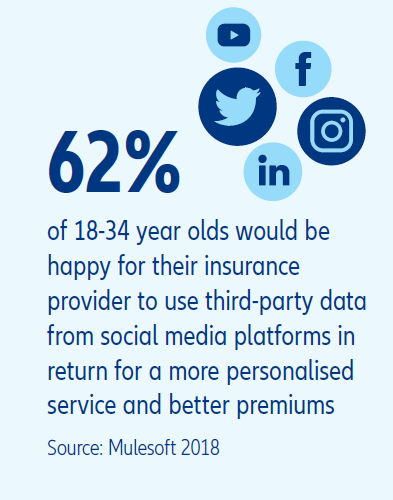

Data comes from myriad sources (including policy and claims records, social media, credit reference agencies and equipment sensors), but data on its own is useless. It becomes valuable only once it has been processed, analysed and/or modelled, and then applied to a specific purpose. Making sense of so much information and using it in a timely manner (often in near real time) requires specialist infrastructure and skills. The skills required are also broad: they include IT, data science and legal/regulatory expertise.

Companies that can exploit data effectively stand to reap diverse benefits, ranging from optimised underwriting and tailored proposition development to better customer segmentation and reduced fraud. Big Data offers companies significant opportunities to gain a competitive advantage, but three key subjects demand attention: consumer privacy, regulation and data ethics.